Radiochemical Genotoxicity Risk and Absorbed Dose

Christopher Busby*

Environmental Research SIA, 1117 Latvian Academy of Sciences, Riga, LV-1050, Latvia

- *Corresponding Author:

- Christopher Busby

Environmental Research SIA, 1117 Latvian Academy of Sciences, Riga, Latvia

Tel: +44 7989 428833

E-mail: christo@greenaudit.org

Received Date: 22 August 2017; Accepted Date: 07 September 2017; Published Date: 14 September 2017

Citation: Busby C (2017) Radiochemical Genotoxicity Risk and Absorbed Dose. Res Rep Toxi. Vol.1 No.1:1

Abstract

The primary mechanism of the biological effect of ionizing radiation has been known for more than 50 years: it is damage to DNA. In pharmaco-toxicological terms DNA is the “receptor” for radiation effects. Despite this knowledge, the current model for predicting or explaining health effects in populations exposed to internal exposures relies only upon quantifying radiation as average energy transfer to large masses of human tissue with no consideration whatever of the ionization density at the DNA relative to cytoplasm or non-DNA regions. This approach is equivalent to describing all chemical toxicological effects in terms of Mass and is clearly absurd.

The concept of Radiochemical Genotoxicity is presented whereby biochemical affinity of internal radionuclides for DNA confers an excess genetic hazard which can be assessed. The most directly measurable effects of radiation exposure are heritable effects detectable around birth. Data enabling the development of a risk coefficient for internal exposures to Uranium fission products are already available. By directly employing a meta-analysis of more than 19 epidemiological studies of post-Chernobyl birth outcomes in 10 different countries affected by contamination from Chernobyl, a generalized risk coefficient for heritable damage is obtained. It is shown that the dose response is biphasic due to death of the foetus before term. The resulting coefficient is 20 per mSv internal exposure. Application of the new factor to the radionuclide exposures occurring during the period of atmospheric test contamination predicts the increases in infant mortality reported in the literature. The philosophical and ethical aspects are briefly discussed together with an account of the legal position in Europe.

Keywords

Radiation; Heritable; Genetic; Dose-response; Chernobyl; Birth defect; Genotoxicity

Introduction

Since the beginning of the 20th Century it has been clear that exposure to ionizing radiation causes harmful biological effects, expressed as cell death, organ damage and individual death, together with delayed effects which include heritable effects, lifespan shortening and cancer. Exposure to natural background radiation is inevitable. But given the contamination of the biosphere by novel radionuclides and increasing exposures from other sources, the question is: how much exposure to ionizing radiation can be considered safe or acceptable? Following the important work on genetic chromosome effects in fruit flies exposed to X-rays by Herman Muller, by the 1950s it was clear that the biological effects of radiation exposure were mainly mediated through damage to, and alteration of, chromosomal and mitochondrial DNA [1] (Figure 1).

Figure 1: The 1946 Nobel prize for medicine was awarded to Herman J Müller for his discovery and subsequent work on the mutations caused by X-rays, effects which he discovered in 1926. By the 1950s Muller warned about the radioactive contamination being caused by the atmospheric nuclear tests. His warnings turned out to be accurate.

Although in the last twenty years downstream biological effects have been shown to be mediated by two indirect effects, genomic instability and bystander responses (a surprising discovery), nothing has emerged which essentially alters the fact that the basis of the biological effects of ionizing radiation is damage to the DNA [2-4].

In the period of nuclear weapons development following the Second World War there was a rapid increase in the contamination of the biosphere with novel radionuclides resulting from the fission of Uranium and Plutonium in increasingly powerful atmospheric tests [5, 6].

Because the radioactive elements created by and used in such weapons and their development industries were exposing workers and also entering the food chain and being inhaled by members of the public the regulation of such exposures became a political necessity. Such regulation had to start with a decision about what was an acceptable level of health risk to human populations. This required two developments:

• A quantitative estimator of biological damage.

• An epidemiological or other way of relating that estimator to specific harm such as cancer, heritable damage, lifespan shortening, infant mortality and congenital malformation.

The development of nuclear weapons, and the parallel development of nuclear energy, took place in the period of the Cold War. Those in charge of the development of models to assess radiation effects were principally physicists who had developed methods for measuring ionizing radiation. These methods were very simple: they relied in various ways, and quite naturally (as it seemed to them), on the measurement of ionization in gases constrained in various types of apparatus. These were either ionization chambers (where gaseous conductivity was measured) or Geiger-Müller chambers (where ions were accelerated under high voltages and individual ion currents counted). Later, scintillation counters were developed, in which flashes of light were amplified and counted. What these methods all had in common was that they obtained a measure of energy absorption per unit mass of material being irradiated [7]. It was then a simple step to the decision to quantify human radiation exposure as a measure of Energy absorbed per Unit Mass. This was called a “Dose” of radiation, or “Absorbed Dose”, defined nowadays as ΔE/ΔM, in Joules per Kilogram or “Grays”. In the USA, the original and earlier unit, the “Rad” (“radiation absorbed dose”, 100 ergs per gram), still enjoys widespread usage. The clear excess radiobiological effectiveness of the densely ionizing short range alpha particle tracks forced the risk agencies to apply an arbitrary weighting factor of 20 to the absorbed dose, thus creating a new quantity, Equivalent Dose, with the unit “Sievert” or, in the USA, the “rem”. This idea of weighting absorbed dose according to its genetic damage was not, however, extended further to other situations and exposures that carried excess genetic risk.

Thus, the dilution of energy into a kilogram of tissue is still in 2017 how all radiation exposure is quantified.
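As a minimal sketch of the quantities just defined, the following Python fragment computes an absorbed dose as energy divided by mass and applies the alpha weighting factor of 20 mentioned above. The function names and the example numbers (1 mJ deposited in 1 kg) are illustrative assumptions and are not taken from any regulatory code.

```python
# Sketch of the dose quantities described in the text.
# Function names and example values are illustrative assumptions.

ALPHA_WEIGHTING_FACTOR = 20.0  # weighting factor for alpha particles quoted in the text
RAD_PER_GRAY = 100.0           # 1 Gy = 1 J/kg = 100 rad


def absorbed_dose_gray(energy_joules: float, mass_kg: float) -> float:
    """Absorbed dose: mean energy deposited per unit mass (J/kg = Gy)."""
    if mass_kg <= 0:
        raise ValueError("mass must be positive")
    return energy_joules / mass_kg


def equivalent_dose_sievert(dose_gray: float, weighting_factor: float = 1.0) -> float:
    """Equivalent dose: absorbed dose multiplied by a radiation weighting factor."""
    return dose_gray * weighting_factor


if __name__ == "__main__":
    dose = absorbed_dose_gray(energy_joules=1e-3, mass_kg=1.0)  # 1 mGy
    print(f"absorbed dose: {dose * 1e3:.1f} mGy = {dose * RAD_PER_GRAY:.1f} rad")
    print(f"unweighted:    {equivalent_dose_sievert(dose) * 1e3:.1f} mSv")
    alpha = equivalent_dose_sievert(dose, ALPHA_WEIGHTING_FACTOR)
    print(f"alpha weighted: {alpha * 1e3:.1f} mSv")
```

Note that nothing in these functions refers to where in the mass the energy is deposited; only the total energy and the total mass enter the result.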

External and internal exposures

Having defined the exposure quantity Absorbed Dose and its weighted version Equivalent Dose, it remained necessary to connect these with the health effects. The source of this connection, which fed through to radiation exposure legislation, was the Life Span Study (LSS) of Japanese survivors of the bombing of Hiroshima and Nagasaki in 1945. This began in 1952 when study groups were assembled on the basis of “dose” categories. In all there were about 85,000 individuals gathered into the study, which was carried out by the Atomic Bomb Casualty Commission (ABCC) and was funded by the US. Later, the organization changed and became the Radiation Effects Research Foundation (RERF) [8]. The LSS continues to this day, and the health outcomes of different external absorbed doses from gamma rays and neutrons to individuals at different distances remain the basis of current understanding of radiation risk.

The organization which mainly defines this relationship is the International Commission on Radiological Protection, ICRP [9,10], which presents itself as an independent charitable body which began in the 1920s. However, the current manifestation began in 1952 as an international version of the USA National Council for Radiation Protection (NCRP), an agency created to regulate exposures during the development of nuclear weapons and nuclear energy. Both have been accused of bias toward the industry, by such eminent scientific experts as Muller [11], Gofman [12], Radford and by one of the founder developers of the new field of “Health Physics” associated with the regulation of exposures to radiation, Karl Z Morgan [13]. In the USA, a similar organization, BEIR exists, but this committee adheres to the general risk model of the ICRP in which all biological effects are based on the LSS studies.

The “science” of Health Physics rapidly developed into an organizational system with its own institutes, diplomas and examinations, creating individuals who were employed by all industries where radiation exposures of workers or members of the public occurred. In this way, historically, the relation between radiation exposures and health has become a scientific black box [14] which is accepted by governments all over the world to regulate radiation exposure and limit the health effects. The acceptable limits for radiation exposures were set by the ICRP and the other similar agencies and committees and adopted by governments and by the United Nations. They are currently based on a limit of 1 MilliSievert (mSv) absorbed dose per year, which the ICRP argued gives the probability of 1 in 1 million extra deaths following the exposure.

In this historical development of a radiation risk model, a very serious mistake was made right at the beginning. This mistake is a simple one to illustrate, and there are many epidemiological studies which support its existence and confirm it. The problem may have arisen because the question was put into the hands of physicists, and not examined by chemists, toxicologists, pharmacologists, physiologists or biologists. Or rather, when it was, their advice was dismissed or ignored [12,13].

By 1959, when the consequences of internal exposures to fallout from the atmospheric nuclear testing began to appear as infant mortality and child leukemia, the study of radiation effects was taken from the doctors of the World Health Organization (WHO), and put in the hands of the physicists at the International Atomic Energy Agency (IAEA) where it remains. By 1970, all the questions had been reduced to doctrine; all the doctrine simplified to catechism and all the catechism promulgated by the Health Physics Society whose members dutifully passed examinations, obtained diplomas and administered the rites wherever radiation exposures were controlled. I return to the philosophical and political dimension.

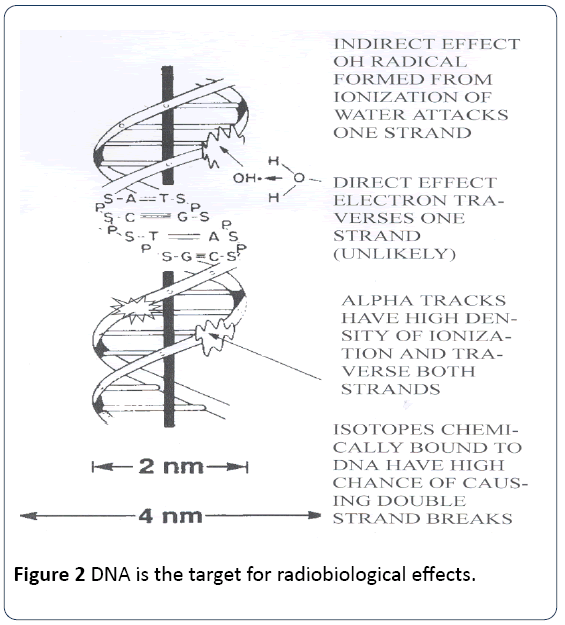

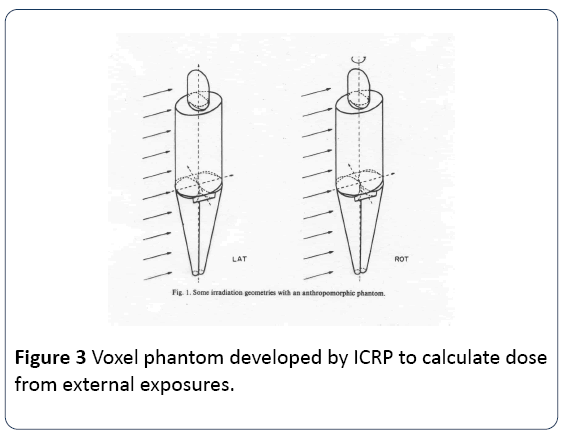

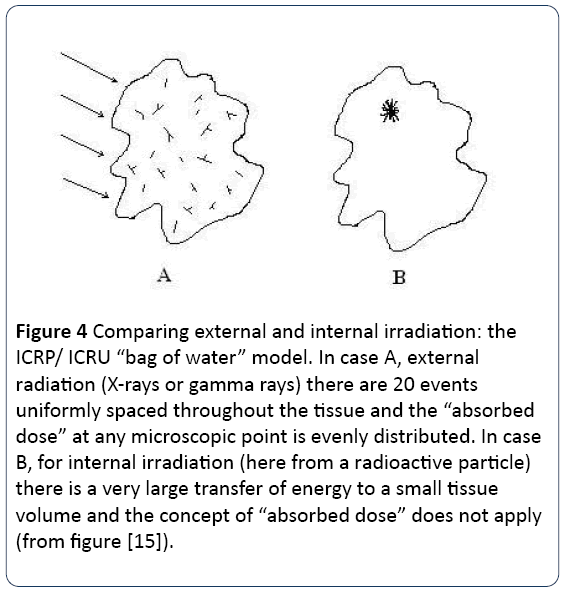

The error which was introduced involved averaging ionizing radiation energy density over large volumes of tissue in a situation where it is now clear, and was from the beginning in 1952, that the target for radiation effects was very specific: the DNA (Figure 2). Thus, most of the energy was wasted in volumes of the body where no DNA existed. This is illustrated by Figures 3 and 4, which show an ICRP radiobiological phantom, employed for calculation of absorbed dose, and the variation in ionization track density that can result from inhomogeneous exposure to a radioactive particle.

Figure 4: Comparing external and internal irradiation: the ICRP/ICRU “bag of water” model. In case A, external radiation (X-rays or gamma rays), there are 20 events uniformly spaced throughout the tissue and the “absorbed dose” at any microscopic point is evenly distributed. In case B, internal irradiation (here from a radioactive particle), there is a very large transfer of energy to a small tissue volume and the concept of “absorbed dose” does not apply (from [15]).

Radiochemical genotoxicity

I will now develop this homogeneity issue, which highlights the difference between quantifying hazard from internal and external exposures. The external dose approach was wrongly extended to deal with internal exposures. This is equivalent to employing dose as mass (in grams) as a predictor of chemical toxicity irrespective of the pharmacological agent. This is the source of the mistake made in choosing “absorbed dose” as a measure of biological damage. This error has been the cause of tens of millions of deaths and the introduction to the human genome of significant harmful mutations [3]. Absorbed dose is a colligative quantity. Like temperature and pressure, it specifies the mean value over a large mass of material. It says nothing about the situation at the molecular level, where the harmful effects occur. In the case of ionizing radiation, the relevant quantity at the molecular level is the ionization energy density in the DNA. Of course, for external exposures, the mean energy density at any position in a homogeneous tissue mass is everywhere the same, so it is a good approximation to the ionization energy density at the DNA.

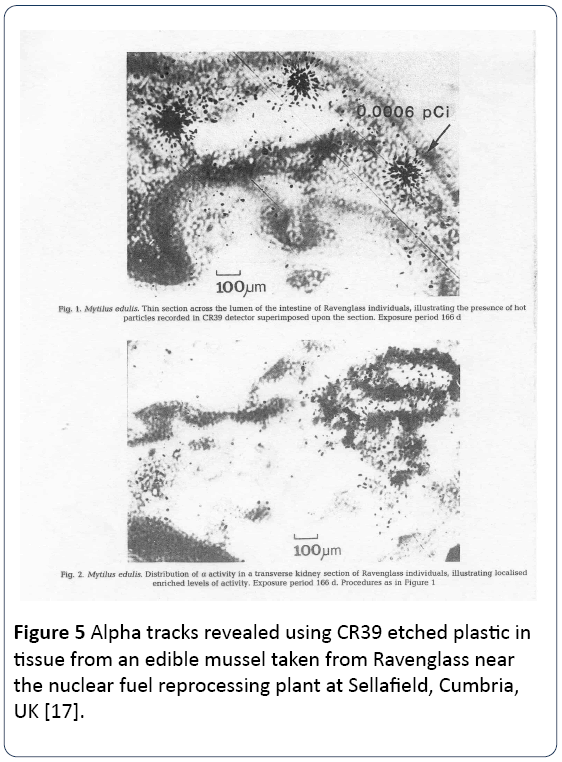

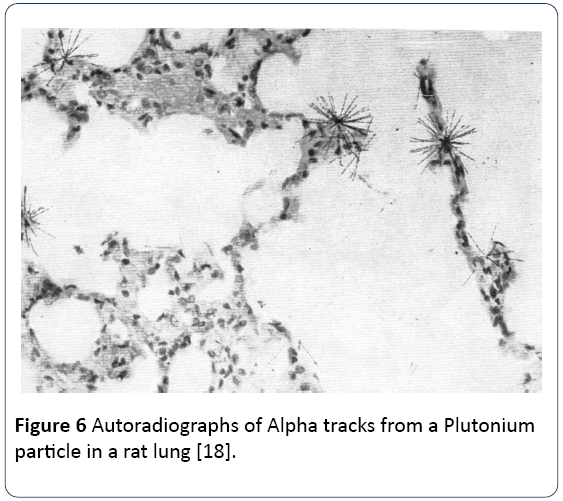

Epidemiological studies of patients exposed to external irradiation, like the ankylosing spondylitis studies [16], are likely to give a reasonable estimate of downstream cancer excess risk. But this could never be true for internal exposures, and it is hard to see how it was ever thought it could. However, the method that was developed by Committee 2 of the ICRP in 1952 to quantify dose from internal radionuclides was exactly the same as the external dose approach. The energies of the decays from the internal radionuclides located in any specified organ were summed in Joules, and the result divided by the organ mass in kilograms. It was the mean energy per unit mass that defined the dose. For many real exposures (Chernobyl, Fukushima, Depleted Uranium, fuel reprocessing plants), exposures are from inhaled and translocated micron sized particles or from radionuclides (like Strontium-90 and Uranium-238) which have significant chemical affinity for DNA. Figure 5 shows alpha emissions from a particle within an edible mussel, Mytilus edulis, contaminated with Plutonium-239 from the Sellafield discharges to the Irish Sea, and Figure 6 shows an autoradiograph of a Plutonium particle in a rat lung. These are examples of inhomogeneity of ionization density. The current model assumes that the ionization density is everywhere the same in tissue.
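The averaging procedure described above (sum the decay energy in an organ, divide by the organ mass) can be sketched as follows. The number of decays, the energy per decay and both masses are hypothetical values chosen only to show the arithmetic; the comparison with a small local mass illustrates the inhomogeneity argument and is not an ICRP calculation.

```python
# Sketch of mass-averaged dose from internal decays.
# All example values below are hypothetical.

JOULES_PER_MEV = 1.602176634e-13  # exact conversion from MeV to joules


def mean_dose_gray(n_decays: float, energy_per_decay_mev: float, mass_kg: float) -> float:
    """Total decay energy (J) divided by the mass it is averaged over (kg)."""
    total_energy_joules = n_decays * energy_per_decay_mev * JOULES_PER_MEV
    return total_energy_joules / mass_kg


if __name__ == "__main__":
    n_decays = 1e6           # hypothetical number of alpha decays from one particle
    energy_mev = 5.0         # hypothetical energy per decay
    organ_mass_kg = 1.0      # hypothetical organ mass
    local_mass_kg = 1e-9     # hypothetical microgram of tissue around the particle

    organ_dose = mean_dose_gray(n_decays, energy_mev, organ_mass_kg)
    local_dose = mean_dose_gray(n_decays, energy_mev, local_mass_kg)
    print(f"averaged over organ:      {organ_dose:.3e} Gy")
    print(f"averaged over local mass: {local_dose:.3e} Gy")
    print(f"ratio local/organ:        {local_dose / organ_dose:.1e}")
```

The same decay energy gives very different numbers depending on the mass chosen for the average, which is the point at issue in the text.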

The word used: “dose”, implied a measured amount of material, analogous to pharmacological or toxicological doses of chemical compounds, and this gave the system a spurious logic. But a dose of aspirin or vincristine or busulphan or sodium cyanide is not at all analogous to a dose of radiation. The modes of action of a pharmacological agent depend upon the dose in grams given by mouth or injection or inhalation reaching the active site at sufficient concentration to exert its effect. The pharmacological dose to the patient or animal must take account of detoxification, metabolism, excretion, and transport to those parts of the body where the active site occurs. The biological effect may even be a consequence of the activity of a metabolite. The anti-cancer DNA mutation agents like busulphan, the nitrogen mustards etc. and indeed the powerful chemical carcinogens like the benzanthrenes and aflatoxins specifically target the DNA. What if we were to design a DNA-seeking molecule which was also radioactive? What would we expect its genotoxicity to be?

The same transport processes occur for radiochemical agents as for pharmacological ones. Because radionuclides are, at base, chemical elements, they behave in organisms in ways that are constrained by their chemistry. They are not uniformly dispersed and therefore neither is their decay energy. They cause discriminate or targeted damage. External radiation is not targeted, it is an indiscriminate agent. But internal exposure to radionuclides can be, depending upon the chemical radioactive element, a discriminate or targeting agent.

It is true that the whole organ dispersion in the body is allowed for by the current radiation model. Thus Iodine-131, a major release from nuclear accidents, is concentrated in the thyroid and in the blood. The model allows for this extra concentration in the thyroid (though, interestingly, not the blood) and the absorbed dose to the thyroid is increased accordingly. But the organ level is where this approach stops. It is at the molecular level that it should be, but is not, applied. Since the DNA is the acknowledged target for radiation effects, we should be concerned about the ionization energy density (or dose) at the DNA. Effects that combine the chemical affinity of a radionuclide for DNA with the local ionization produced when that radionuclide decays I will term radiochemical genotoxicity. The radiochemical genotoxicity of a given internal exposure can be several orders of magnitude greater than would be inferred from the organ absorbed dose as calculated by Health Physics. There are theoretical and epidemiological indicators for this [15].

Enhanced genotoxicity of internal radionuclides

The issue of internal radionuclide effects was raised by the European Committee on Radiation Risk (ECRR) in 2003, 2009 and 2010 [19,20].

The ECRR is an independent committee of scientists formed in Brussels in 1998 specifically to address the issue of internal exposure effects and increasing evidence for large errors in the description and modelling of internal radiation exposures in the system of the ICRP. In the ECRR model which was published in 2003, internal radionuclide doses were calculated in the usual way, as energy per unit mass, but the results were weighted according to estimates of the enhancement of ionization at the DNA expected from the chemical affinity of the element for the DNA and from other properties of the radionuclides and their decay processes. These weightings were in turn derived ad hoc from considerations of the chemistry and known decay characteristics of the element and its manifestations. Results were checked against epidemiological data on cancer in groups exposed to internal fission-products, for example the population of Wales and England, where similar socio-genetic types had been differentially exposed due to rainfall and measured differentials in Sr-90 contamination [21-23].

The quantity resulting from the weighting of internal radionuclides will be termed the genetic dose in a forthcoming report of the ECRR [24]. The unit Müller (Mü) for Genetic Dose Equivalent was recently suggested by the sub-committee on Units and Measurements of the International Foundation on Research on Radioactivity Risk in Stockholm and will be adopted in 2017 by the ECRR main committee. Herman Müller, mentioned earlier, was the Nobel Prize winning discoverer of the genetic effects of ionizing radiation and warned in 1950-52 (contentiously at the time, though accurately, as it turned out) of the serious genetic damage that atmospheric atomic testing would introduce to the human race.

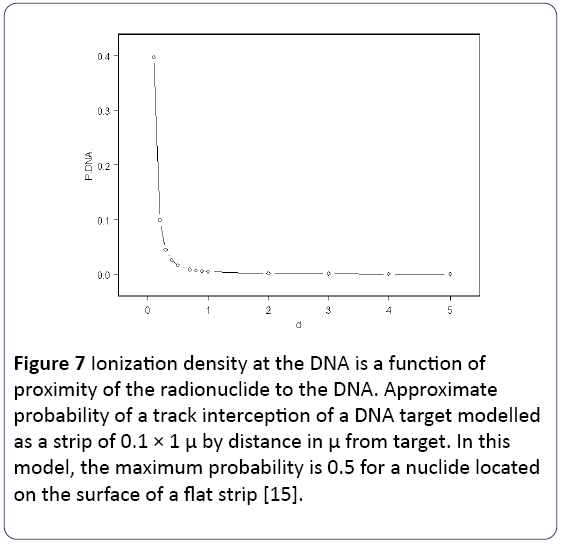

Such internal radionuclide effects had been the subject of little research effort in the years following the Second World War, but some laboratory studies had been carried out, principally in the Soviet Union. However, from 1975 on, epidemiological studies began to report significant health effects in a number of populations exposed to internal radionuclides at doses which seemed far too low to be the cause of the effects, if the ICRP Health Physics approach to dose calculation was employed. I will return to epidemiological studies. The issue of internal radionuclide effects was reviewed in 2013 [15]. That review presented evidence from Soviet and other researchers that the biological effects of internal exposures could be much greater than the dose calculations predicted, and discussed the ways in which chemical affinity could increase the local ionization at the DNA. It listed a number of internal radionuclide exposure studies which supported enhancements of genotoxicity for fission-product exposures of upwards of 300-fold. It presented a mathematical derivation of the probability of a decay track from a local radionuclide hitting a DNA strand as a function of the distance of the radionuclide from the DNA. The result is shown in Figure 7.

Figure 7: Ionization density at the DNA is a function of proximity of the radionuclide to the DNA. Approximate probability of a track interception of a DNA target modelled as a strip of 0.1 × 1 μ by distance in μ from target. In this model, the maximum probability is 0.5 for a nuclide located on the surface of a flat strip [15].

Regarding health effects in living systems, the contamination from human activity likely to be of concern resolves itself into a small number of specific isotopes which the ECRR has singled out as representing hazards which arise from DNA binding or DNA damage enhancement effects. Some major nuclides of concern are listed in Table 1 below.

| Nuclide | Half life | Combined Weighting Factor |
|---|---|---|
| H-3 | -- | 20 |
| Sr-90 | -- | 400 |
| Ba-140 | -- | 600 |
| Ra-226 | -- | 20 |
| U-238 | -- | 1000* |
| U-234 | -- | 1000* |
| U-235 | -- | 1000* |

*inhalation

Table 1: Some important environmental nuclides exhibiting radiochemical genotoxicity with provisional hazard weighting factors for human exposures (from ECRR2010). These weighting factors are currently under review and an ECRR report on the ECRR hazard coefficients for calculation of the Genetic Dose Equivalent is in preparation.

A simple calculation of the enhancement factor

The main target, DNA in the cell nucleus, represents a very small fraction of the total material in the cell [25]. In a 10 μ diameter cell (mass 520 pg) there is 6 pg of DNA made up of 2.4 pg bases, 2.3 pg deoxyribose, 1.2 pg phosphate. In addition, associated with this macromolecule are 3.1 pg of bound water and 4.2 pg of inner hydration water [16]. Since absorbed dose is given as Joules per kilogram, if it were possible to accurately target the DNA complex alone, a dose to the cell (mass 520 pg) of 1 milliJoule per kilogram (one milliGray, one milliSievert) would, if absorbed only by the DNA complex (6 pg), represent a dose of 520/6=87 mSv to the DNA. It is possible to imagine the DNA as an organ of the body, like the thyroid gland or the breast. If this is done, then there should be a weighting factor for its radiobiological sensitivity of 87 which would be based on spatial distribution of dose alone. Of course, for external photon irradiation, to a first approximation, tracks are generated at random in tissue.

Therefore only a small proportion of these tracks will intercept the DNA but the interception will be mainly uniform, and the health effects from such external exposure may be assumed to be described by the averaging approach of “absorbed dose”. One simple way to illustrate this spatial effect is merely to consider the human body as two compartments, an organ A which may be called “DNA” and one B which may be called “everything else”. The current ICRP risk model calculates the absorbed dose of any internal exposure by dividing the total decay energy by the mass. This would not distinguish between compartments A and B; both would receive the same dose.
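The single-cell arithmetic given above can be checked with a short sketch. The masses are the ones quoted in the text; the unit-density assumption used to recover the 520 pg cell mass from the 10 μ diameter, and all function names, are assumptions made here for illustration.

```python
# Sketch of the spatial enhancement arithmetic for a single cell.
import math

CELL_DIAMETER_M = 10e-6        # 10 micron cell, from the text
DENSITY_KG_PER_M3 = 1000.0     # assumption: cell has the density of water
CELL_MASS_PG = 520.0           # cell mass quoted in the text
DNA_MASS_PG = 6.0              # rounded DNA mass quoted in the text
DNA_COMPONENTS_PG = {"bases": 2.4, "deoxyribose": 2.3, "phosphate": 1.2}
PG_PER_KG = 1e15               # 1 kg = 1e15 picograms


def sphere_mass_pg(diameter_m: float, density_kg_per_m3: float) -> float:
    """Mass of a sphere of the given diameter and density, in picograms."""
    volume_m3 = (4.0 / 3.0) * math.pi * (diameter_m / 2.0) ** 3
    return volume_m3 * density_kg_per_m3 * PG_PER_KG


def spatial_enhancement(total_mass: float, target_mass: float) -> float:
    """Factor by which dose rises if all energy is absorbed in the target only."""
    return total_mass / target_mass


if __name__ == "__main__":
    print(f"sphere mass: {sphere_mass_pg(CELL_DIAMETER_M, DENSITY_KG_PER_M3):.1f} pg")
    print(f"sum of DNA components: {sum(DNA_COMPONENTS_PG.values()):.1f} pg")
    factor = spatial_enhancement(CELL_MASS_PG, DNA_MASS_PG)
    print(f"1 mSv to the cell -> {factor:.0f} mSv if absorbed by the DNA alone")
```

Under the unit-density assumption the sphere mass comes out close to the quoted 520 pg, and the ratio 520/6 rounds to the factor of 87 used in the text.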


But as far as cancer is concerned (or other consequences of genetic damage) all the ionisation in compartment B is wasted. It has no effect. Therefore it is the dose to compartment A that is the cause of the effect. This would suggest that the spatial enhancement is at minimum ratio MassB/MassA or about

Epidemiological evidence from Chernobyl— Calculating the risk coefficient for heritable effects

Exposure, quantified as Absorbed Dose (Grays/Sieverts, Rads/Rems), has historically been tied to the cancer and genetic effects measured in the study of the survivors of the atomic bombing of Hiroshima and Nagasaki in 1945. In this Life Span Study (LSS), groups of individuals were recruited in 1952 (some 7 years after the bombing) and their cancer and any heritable effects in children recorded and related to the estimated Absorbed Doses they received. The dose estimates were based on calculations relating to the individual’s distance from the hypocentre of the detonation and on experiments made on similar bombs exploded in the Nevada Desert in the USA.

The risk coefficients derived from this study, which is ongoing, are provided by the ICRP and form the basis of legal constraints on exposure. However, the LSS doses are all external doses, and do not include any estimate of internal exposures to fallout and rainout from the bomb components which contaminated the areas where all the high, medium and low dose groups were situated. In addition, there was a no-dose group, the Not in City group, who came to the towns some months after the bombs and who lived in the fallout contaminated areas. The principal internal exposures were to Uranium and Plutonium components of the weapons themselves.

The issue of the total failure of the LSS to deal with internal exposures was raised recently in an invited letter to the journal Genetics [26], where it was argued that since the LSS was silent on the heritable effects of internal exposures, other studies were needed to determine these risks.

How could such risks be determined? What evidence could be employed to formulate accurate risk coefficients? What effect should such risk coefficients have on legal limits and on the societal regulations? The Chernobyl accident in 1986 represented a very important event with regard to examining the heritable and other effects of exposures to internal fission-product and Uranium contamination of environments [27]. In 2017 it is now clear that, following Chernobyl, a large number of epidemiological studies of heritable effects in contaminated areas of Europe and of countries as far away as Egypt and Turkey reported significant increases in almost all congenital malformations, genetic defects and heritable conditions including infant leukemia.

These results in newborn babies and children are also pointers to effects in later life, since it is now universally accepted that cancer and a wide range of adverse health effects follow from genetic and genomic damage. A 2016 review of the heritable effects of exposures to internal fission-product contamination, which focused on Chernobyl effects, showed clearly that internal exposures to as low as 1 mSv (calculated as Absorbed Dose) could cause significant observable excess risks in babies and children [28]. Reference was made to some 20 or more studies published in the peer-reviewed literature by different groups of researchers in different countries. The risk coefficient derived from these combined studies gave a doubling dose for heritable effects of at least 10 mSv, and the analysis showed clearly that the dose-response was non-linear, for reasons given in the paper. The ICRP’s doubling dose for heritable effects of around 1000 mSv or more was derived from mice because the LSS apparently did not show any heritable effects in humans. Reasons for this error were discussed in the 2016 review [28].

The Chernobyl heritable damage results enable the heritable effects of internal exposures to the baby to be assessed more accurately and safely than any previous study. This is because, unlike the Japanese LSS studies, birth data is available continuously through the period of contamination and exposure. The Japanese LSS studies, apart from having excluded internal exposures from the picture and having abandoned the non-exposed controls, began some 7 years after the exposure event. All that is necessary to obtain a risk coefficient for internal exposure is to have currently accepted estimates of the dose to the infant from shortly before conception to term. Such estimates for the various countries where birth defect outcomes were studied have been published by a number of authorities including the United Nations. In the case of some studies internal doses were measured and reported.

As discussed in [28], the dose response is predicted to be biphasic, for the reason that above a certain level of contamination exposure, the baby dies before term. Therefore the heritable damage risk coefficient deduced from a meta-analysis is applicable up to the point that the baby dies in utero or even, due to sperm or egg damage, fails to implant or develop at all.

Table 2 lists the studies of heritable effects found in the Chernobyl affected territories and reported by different groups and employed here to calculate the new risk coefficient.

| Study | Region | a Dose mSv | Excess Risk | ERR/mSv |
|---|---|---|---|---|
| Hoffmann [29] | Turkey, Bulgaria, Croatia, Germany, Belarus, Finland, Norway, Eurocat registries | 0.1; 0.5 | >0.2 | 2; 0.4 |
| Dolk et al. [30] | 16 Eurocat registries | 0.03-0.7 | b No effect reported | 0.0 |
| UNSCEAR [31] | Review of literature to 2006 | 0.03-1.0 | b No effect reported | 0.0 |
| Lazjuk [32] | Belarus (legal abortuses); Not teratogenic effects | 6.7; 0.44 | 0.81; 0.49 | 0.12; 0.23 |
| Feschenko et al. [33] | Belarus (chromosome aberrations) | 6.7; c control | 0.39; 0.09 | 0.06; 0.09 |
| Bogdanovitch [34]; Savchenko [34] | Belarus (all malformations) | 10 (5 high dose regions) | 2; 5.6 | 0.2; 0.56 |
| Kulakov et al. [35] | Belarus high dose area; Ukraine high dose areas; All malformations | 10; 10 | 3; 2 | 0.3; 0.2 |
| Petrova et al. [36] | Belarus various dose areas; All malformations | 10; 8; 3; 1 | 1.5; 1.3; 1.2; 1.1 | 0.15; 0.16; 0.25; 1.1 |
| Wertelecki [37,38] | Ukraine Polissia region: Neural Tube defects (NTD); Microcephaly; Microphthalmos | d 26 is 12-year internal dose; one year internal dose is ~2 mSv | 1.59; 1.85; 3.03 | 0.8; 1.1; 1.5 |
| Akar et al. [39] | Turkey (NTD); Turkey (anencephalus); N=90,000 | 0.5; 0.5 | 5.4; 5 | 10.8; 10.0 |
| Caglayan et al. [40] | Aegean Turkey (NTD); N=19,115 | 0.5 | 4.7 | 9.4 |
| Guvenc et al. [41] | Eastern Turkey (NTD); N=5240 | 0.5 | 4.0 | 8.0 |
| Mocan et al. [42] | Eastern Black Sea (NTD); N=40,997 | 0.5 | 3.4 | 6.8 |
| Moumdiev et al. [43] | Bulgaria; Major malformations | 0.8 | Significant increases | >1 |
| Kruslin et al. [44] | Croatia; CA autopsies; N=3451 | 0.5 | Increased frequency | >1 |
| Zieglowski et al. [45] | Germany ex GDR; Cleft lip and palate (CLP) | 0.3 | 0.09 | 0.3 |
| Scherb et al. [46] | Germany Bavaria; CLP | 0.3 | 0.086 | 0.3 |
| West Berlin government [47] | Germany: West Berlin; Malformations in stillbirths at autopsy | 0.2 | 2.02 | 10.0 |
| Lotz et al. [48] | Germany: City of Jena | 0.2 | 4.1 | 20.5 |
| ICRP [9] | All heritable effects in humans (based on mice) | 1000 | 0.02 | 0.00002 |

a Doses were either taken from the paper or estimated on the basis of UN reports or calculated from the reported levels of area contamination by Cs-137 using FGR12 (Part 2) and the computer program “Microshield”.

b No effect was accepted because there was no continuous dose response found in the levels of exposure. Recalculation of the Dolk 1999 paper in reference [ref] gave a significant effect when comparing high dose and low dose groups.

c The Belarus control areas were, of course, contaminated to a greater level than the exposed groups in distant countries where effects were reported, thus their status as controls is questionable.

d Where the cumulative dose over T years to the parent is given, it is a 1 year dose which is tabulated here, calculated by simple division by T.

Table 2: Studies of congenital malformation and other heritable effects reported in populations exposed to Chernobyl contamination with estimated or reported 1-year dose equivalents to mothers, Excess Relative Risks (ERR) per mSv (ICRP)

Listed also are the estimated or reported absorbed dose to the parents, the excess relative risk (ERR) and the Excess Relative Risk per mSv resulting from the study. The current relative risk factor for heritable damage adopted by the ICRP and UNSCEAR of 0.02 per Sievert (0.002% per mSv) is also tabulated.
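The last column of Table 2 can be reproduced from the two columns before it. The following is a minimal sketch, assuming that "ERR per mSv" is simply the tabulated ERR divided by the tabulated 1-year dose in mSv; the function name `err_per_msv` is illustrative and does not come from the cited studies.

```python
def err_per_msv(err: float, dose_msv: float) -> float:
    """Excess relative risk per mSv: tabulated ERR divided by the 1-year dose (mSv).

    Assumption: simple division, with no uncertainty handling.
    """
    if dose_msv <= 0:
        raise ValueError("dose must be positive")
    return err / dose_msv


# (study, 1-year dose in mSv, ERR) for a few rows as tabulated in Table 2
rows = [
    ("Caglayan et al. [40]", 0.5, 4.7),
    ("Guvenc et al. [41]", 0.5, 4.0),
    ("Mocan et al. [42]", 0.5, 3.4),
    ("Lotz et al. [48]", 0.2, 4.1),
    ("ICRP [9]", 1000.0, 0.02),
]

for study, dose, err in rows:
    # Each value should match the final column of the corresponding row
    print(f"{study}: {err_per_msv(err, dose):.5g} per mSv")
```

The ICRP row uses a dose of 1000 mSv (1 Sv), which is why its per-mSv value is a thousand times smaller than its per-Sievert value.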

Schmitz-Feuerhake et al. [28] also cite other studies which demonstrate heritable effects at low doses. Of interest are the studies of atomic test veterans, which show an 8- to 10-fold excess risk of major congenital conditions in both children and grandchildren at doses which were recently calculated [49,50] to be around the 1 mSv level. However, for developing a risk coefficient for heritable effects from internal exposure, the values in Table 2 suffice. It is accepted that these values suffer from a degree of uncertainty. First, they are based on external exposures; but the activity levels of internal exposures are second order, given the risk coefficients for such exposure published by the ICRP. Second, the doses given in Column 3 are estimates of the dose to the parent for 1 year before the birth of the child; where cumulative doses were estimated, these were reduced to a 1-year dose by simple division. Third, the different studies often examined different congenital malformations, and therefore it is not strictly accurate to employ all of these results to establish a “risk factor” for “heritable disease”. Fourth, and quite relevant, some of these studies were of legal abortuses, which highlights the issue that will now be addressed: the dose response.

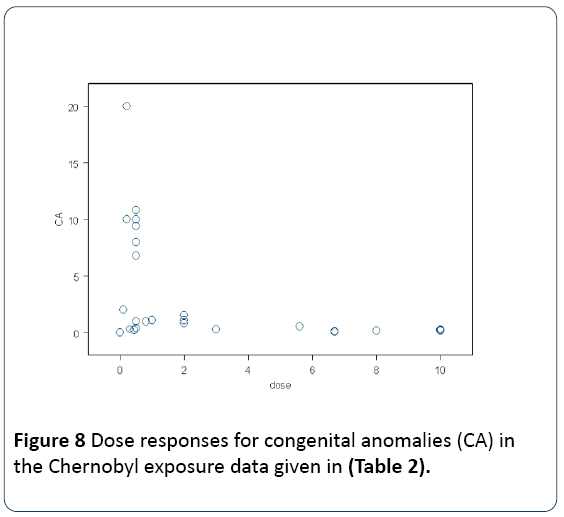

If the internal exposures caused the death of the child in utero, clearly these cases cannot be counted in studies of congenital anomaly at or shortly after birth. This problem was addressed in the Schmitz-Feuerhake et al. paper [28]. Above a certain level of dose to the genome, accumulated from the irradiation of the gametes through the foetal stage to birth, the individual is killed, and so the observed heritable effect disappears. The dose response for congenital malformation is therefore biphasic. This can clearly be seen in Figure 8 below, where the data in Table 2 are plotted. The same effect is seen in data from the same study published by Koerblein [28,51].

The risk coefficient for heritable disease following internal exposures to mixed fission products

The information in Table 2 and Figure 8 is now converted into a risk coefficient. The ICRP risk coefficient for heritable illnesses, given in Table 2 and published in 2007, is 0.02 per Sievert. This translates to a doubling dose of 50 Sieverts and a risk per mSv of 0.00002. Since whole body doses of 5 Sieverts are fatal, it is clear that ICRP, on the basis of the Japanese LSS result, does not concede that heritable radiation effects in humans exist. Schmitz-Feuerhake et al. [28] chose a doubling dose of 10 mSv but pointed out that effects which clearly occurred at doses lower than 1 mSv (conventionally calculated) pointed to a much higher risk coefficient.

In Table 2, the doses are estimated in mSv. The dose-response relation given in Figure 8 suggests an excess relative risk of about 10 at a dose of about 0.5 mSv. That would give a risk coefficient of 20 per mSv. Thus, in these low-dose circumstances, the error in the ICRP risk coefficient is truly enormous. If quantified, it becomes 20/0.00002 = 1 million. Such a level of error in the current model would accommodate all the observations of child leukemia near nuclear sites. It is the enormous disparity between calculated doses and epidemiological observations in the nuclear site studies which has caused the scientific community to avoid assigning causation: no one can believe that the error can be so great. Yet the evidence from Chernobyl is massive and unambiguous. It is clear that the correct risk factor for congenital malformation following internal exposure of the parent in the year prior to the birth is 20 per mSv. Do we have any evidence as to which nuclide or nuclides are mainly responsible? The answer is yes: there is such evidence already in the literature [52]. One major component appears to be inhaled Uranium. Studies of populations exposed to Uranium weapons in Iraq have shown clearly that very low doses from inhalation exposure to Uranium nanoparticles cause very significant increases in congenital malformation rates.
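The arithmetic behind the coefficient of 20 per mSv, the ICRP doubling dose and the factor of 1 million can be laid out step by step. This is a sketch under the assumptions stated in the text (an ERR of about 10 at about 0.5 mSv, and an ICRP coefficient of 0.02 per Sievert); the constant and function names are illustrative only.

```python
ICRP_RISK_PER_SV = 0.02   # ICRP heritable risk coefficient, per Sievert (Table 2)
OBSERVED_ERR = 10.0       # approximate ERR read from the dose-response plot
OBSERVED_DOSE_MSV = 0.5   # approximate dose at which that ERR occurs, in mSv


def doubling_dose_sv(risk_per_sv: float) -> float:
    """Dose in Sv at which the excess relative risk reaches 1 (linear assumption)."""
    return 1.0 / risk_per_sv


def per_msv(risk_per_sv: float) -> float:
    """Convert a per-Sievert coefficient to a per-mSv coefficient."""
    return risk_per_sv / 1000.0


proposed_per_msv = OBSERVED_ERR / OBSERVED_DOSE_MSV      # 10 / 0.5
icrp_per_msv = per_msv(ICRP_RISK_PER_SV)                 # 0.02 / 1000
error_factor = proposed_per_msv / icrp_per_msv           # ratio of the two

print(f"ICRP doubling dose: {doubling_dose_sv(ICRP_RISK_PER_SV):.0f} Sv")
print(f"ICRP coefficient:   {icrp_per_msv:.5f} per mSv")
print(f"Proposed:           {proposed_per_msv:.0f} per mSv")
print(f"Ratio:              {error_factor:.3g}")
```

The ratio depends entirely on the two input readings, so any revision of the ERR or dose estimate rescales it proportionally.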

| EU State | Contact Person(s) | Result |
|---|---|---|
| United Kingdom | EURATOM Justification Authority; Contact: Department for Business, Energy and Industrial Strategy, Whitehall Place, London SW1A 2AW, justification_application_centre@beis.gov.uk; Public Health England (PHE); COMARE (a) | Request sent in November 2016. Acknowledgement promised a response in March 2017. Response from Matt Clarke of Department for Business, Energy and Industrial Strategy, based on advice from PHE, stating that there was no new and important evidence. Richard Bramhall replied stating that Matt Clarke had failed to address the evidence. No reply so far. COMARE Chair Chris Gibson also did not address the evidence but rather referred to earlier reports which are irrelevant. |
| Republic of Ireland | National Contact Point: LEHANE Michéal (Director); EPA/ORP (Environmental Protection Agency/Office of Radiological Protection) | Letter was sent in Jan 2017. Measured response was made by Ciara McMahon agreeing that the area of internal radiation effects was a legitimate concern but stated that not enough evidence had been available generally. Referred to CERRIE. Did not refer to the evidence sent in terms of New and Important Evidence. A reply to this asking for a specific response to the Chernobyl heritable effects evidence has not been responded to. |
| Sweden | EURATOM contact: HASSEL Fredrik, SSM (b); Swedish Environment Ministry; Swedish Justice Chancellor | Initial refusal to address this was followed by a visit to the SSM in Stockholm. When finally cornered, Hassel wrote that it was not the responsibility of SSM or his personal responsibility to initiate any re-justification on the basis of new and important evidence. Did not address the evidence. He stated that this matter was in the responsibility of the ICRP. His refusal was reported in a letter to the Justice Chancellor and to the Swedish Environment Ministry. A letter from the Environment Ministry stated that it has all confidence in the SSM. |
| France | EURATOM contact, National Contact Point: CHEVET Pierre-Franck (Chairman); Reply from Jean-Luc Lachaume, ASN (French Nuclear Safety Authority), www.asn.fr | Initial reply stated that formal response would be made. A response appeared in May in which it was stated that the Justification process was the responsibility of the company which caused the exposures. This is quite incorrect. |
| Denmark | EURATOM contact: ØHLENSCHLAEGER Mette | Both initial replies and responses to further letters stated that the issue is one for the ICRP and not for the Danish National Competent Authority. |
| Germany | EURATOM contact: GREIPL Christian (Head of Directorate Radiological Protection); BfS (c) | No reply has been received. |

(a) Committee on Medical Aspects of Radiation in the Environment. (b) Swedish Radiological Protection Authority. (c) German Radiological Protection Authority.

Table 3: Responses of the National Competent Authority EURATOM designated legal contact and also other State actors to requests from individuals in Member States to re-Justify radiation exposures under Article 6.2 of the EURATOM 96/29 Directive.

In these scenarios, there were no other internal radioactive exposures. In addition to increases in congenital effects at birth, there were very high levels of cancer and leukemia. In one study, the leukemia rate in young people was more than 30 times the control level in Egypt [53]. These high rates resulted in the report being attacked as absurd. But similar “absurd” effects have been appearing in many studies of those exposed to internal radionuclides, particularly Uranium. And Uranium, as the Uranyl ion, binds strongly to DNA and has other properties that ensure targeting of the very material that is the origin of the genetic and genomic effects which result from radiation exposure.

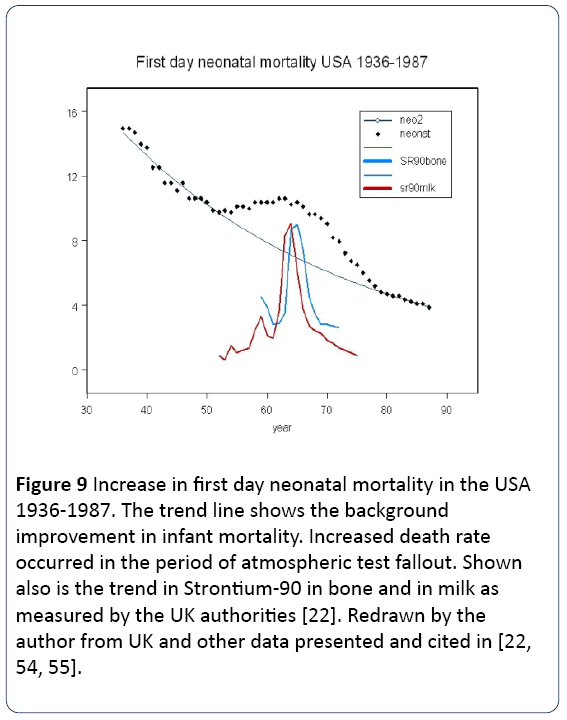

There are some important consequences. If this risk coefficient is correct, then we should see effects from historic exposures which occurred before Chernobyl. The clearest of these is the increase in infant mortality seen at the time of the atmospheric nuclear testing. It is clear from the dose-response relation shown in Figure 8 that above a dose of about 1 mSv foetal or infant death ensues. Figure 9 is a plot of first day infant mortality rate in the USA from 1934 through the period of atmospheric testing.

Figure 9: Increase in first day neonatal mortality in the USA 1936-1987. The trend line shows the background improvement in infant mortality. Increased death rate occurred in the period of atmospheric test fallout. Shown also is the trend in Strontium-90 in bone and in milk as measured by the UK authorities [22]. Redrawn by the author from UK and other data presented and cited in [22, 54, 55].

The mortality data are from Whyte [54], who followed Sternglass [55] in addressing this anomaly. Also plotted is the trend level of Strontium-90 in milk and in children’s bones, measured in the UK. According to UNSCEAR, the dose over the period of the main contamination, 1959-1963, was about 1 mSv. If we say that the 1-year dose was 0.5 mSv, and there was clearly a 25% increase in first-day mortality, this gives a coefficient of 0.5 per mSv, in the same range as those reported for the Chernobyl effects. For example, Lazjuk [32], looking at the low dose region of all Belarus, found almost exactly this risk coefficient in legal abortuses.
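The comparison with the Chernobyl coefficients can be made explicit. The sketch below assumes the figures quoted in the text (a 25% increase at a 1-year dose of 0.5 mSv) and uses only the numeric ERR-per-mSv values from the Table 2 rows listed in this section; rows reported only as ">1" and the ICRP row are left out, and the variable names are illustrative.

```python
# Fallout-era estimate quoted in the text
mortality_increase = 0.25   # 25% rise in first-day mortality, as a fraction
one_year_dose_msv = 0.5     # assumed 1-year dose in mSv

fallout_coefficient = mortality_increase / one_year_dose_msv

# Numeric ERR-per-mSv values from the Table 2 rows shown in this section
chernobyl_coefficients = [10.8, 10.0, 9.4, 8.0, 6.8, 0.3, 0.3, 10.0, 20.5]

lowest = min(chernobyl_coefficients)
highest = max(chernobyl_coefficients)
within_range = lowest <= fallout_coefficient <= highest

print(f"Fallout coefficient: {fallout_coefficient} per mSv")
print(f"Table 2 range:       {lowest} to {highest} per mSv")
print(f"Within range:        {within_range}")
```

A range check of this kind says nothing about causation; it only shows that the fallout-era figure falls between the smallest and largest tabulated values.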

Philosophical, political and legal dimensions

It would appear that Muller had been right to warn of the genetic effects of exposure to the fallout from weapons tests. The massive evidence for the genetic effects of internal exposures can be found if looked for. But hypnotized by the absorbed dose model, and constrained by employment and scientific culture, no one looked. When evidence appeared, it was viewed through the prism of the scientific Health Physics model and discarded as being impossible.

Thus, according to the 2010 model of the ECRR, tens of millions of infants have died, more have not been born, and the exposures have created a cancer epidemic which is now revealing itself in the children of those born in the peak fallout years. What can be done about this? Clearly it is politically and philosophically wrong to permit a process which results in the deaths of a significant fraction of the population. The ICRP draws this line at a 1 mSv exposure, which it believes causes one death from cancer in 1 million individuals exposed.

The evidence reviewed here and in Schmitz-Feuerhake et al. [28] is rather that a 1 mSv internal exposure can double the rate of congenital malformations, and that over the fallout period 1959-63 it caused about a 15% increase in infant mortality in the northern hemisphere (ECRR2010).

The Law in Europe is the EURATOM 96/29 Basic Safety Standards Directive of 13th May 1996, transposed into all EU Member States by May 2000 [56]. The Directive is thus Law in each member state. The Directive accepts that there are harmful effects of exposures but states clearly that any such exposures have to be “Justified”. In Article 6.2 it states:

“Existing classes or types of practice may be reviewed as to Justification whenever new and important evidence about their efficacy or consequences is acquired.”

Following enquiries of the European Commission made by UK Green Party European Parliamentarian Caroline Lucas in 2009, it was stated clearly that any requirement for re-Justifying radiation exposures must be carried out firstly in Member States, where the laws were the responsibility of the EURATOM contact person in the State’s National Competent Authority. From December 2016, individual citizens of the UK, the Republic of Ireland, Sweden, France, Germany and Denmark wrote the following letter to the legal EURATOM contact person in their country:

Justification of radiation exposures of members of the public and workers: Review of existing practices

New and important information

EURATOM Contact

National Competent Authority

State

Dear Sir/Madam,

1. This request requires the re-justification of historic and currently on-going practices involving exposures of members of the public and workers to ionizing radiation principally from radionuclide contamination of the environment.

2. Under Article 6.2 of the Council Directive 96/29/Euratom of 13 May 1996:

Existing classes or types of practice may be reviewed as to Justification whenever new and important evidence about their efficacy or consequences is acquired

3. Under Article 19(2) of the Council Directive 2013/59 of 5th Dec 2013:

Member States shall consider a review of existing classes or types of practices with regard to their justification whenever there is new and important evidence about their efficacy or potential consequences.

The letter went on to outline the evidence reviewed above, in particular the increases in heritable effects in populations exposed to Chernobyl contamination reviewed in the 2016 Schmitz-Feuerhake et al. paper [28]. Some results of this exercise are listed in Table 3.

Now it is clear from the responses received from those individuals written to that they accept that they have to write something in reply: it is the law. But what is also clear is that they have no intention of carrying out any re-justification. The responses fall into three categories.

(a) (Sweden, Denmark) It is not the responsibility of the State to adjust the basis of radiation protection law if new scientific discoveries show that the law is unsafe and is not protecting the public. It is somehow the responsibility of the ICRP to do this.

(b) (UK and Ireland) It is the responsibility of the State to re-justify exposures on the basis of new and important evidence, but the relevant authorities will not address the evidence or will dismiss it by referring to other selective or irrelevant information.

(c) (France) It is the responsibility not of the State but of the polluting companies to justify any exposures.

Now response (a) above is just not true. The ICRP has no democratic authority whatever: it is a charity that advises on radiation risk, and in this it is little different from the ECRR. Neither is it the companies as in (c) above.

It is the National Competent Authority of the Member State that has to examine the new and important evidence and act on it. Some of the countries took this latter position but refused to act. It is not my intention to argue here whether the evidence I have outlined is accurate or not. But it might have been possible for those individuals and agencies addressed to provide critical analysis of all the studies reviewed in Schmitz-Feuerhake et al. [28], arguing, for example, that the studies were unsafe for whatever reason, or that they had been carried out badly, or that the data were suspect. But none of the EURATOM designated individuals and National Competent Authorities addressed the evidence at all. It is hard to see how they could have, given the number of studies and the wide degree of agreement between them in the many different countries where they were carried out. Thus it is clear that there is a distinct possibility, indeed probability, that the new evidence from Chernobyl (and from other studies reviewed in the Schmitz-Feuerhake et al. review) indicates that, as a result of perhaps understandable historical mistakes resulting from early science, the current radiation protection regime has allowed, and continues to allow, serious genetic and genomic damage to the human population. What can be done to force the evidence of this into the political and legal domain?

Conclusion

Ethical, philosophical and political dimensions

It is clearly unacceptable for any Society to permit processes which create contamination that causes illness or death in its citizens. Nevertheless, there are many activities and processes which are of value to Society as a whole but which are known to cause harmful effects. Laws are then developed to balance such harm against advantages to Society as a whole.

This process is based on the Utilitarian or cost-benefit philosophy of Jeremy Bentham and John Stuart Mill. The issue, as it pertains to radiation protection, is reviewed in ECRR2003 and ECRR2010, where injustices of utilitarianism and the alternative “human rights” approaches are discussed [3]. The ICRP specifically refers to a utilitarian approach in its publications [9,10], where it suggests that a death rate of one in 1 million exposed individuals may be considered acceptable to society. This is a clear Utilitarian decision, and (although the mathematical derivation is a matter of question) is the stated basis for an annual dose limit of 1 mSv, adopted by the EURATOM BSS also. However, it is clear that the new and important evidence provided by the Chernobyl heritable effects leads to a birth defect rate, and of course an excess infant death rate, which is of the order of 50% following an internal exposure of 1 mSv. Such exposures occur and have occurred in many scenarios, and infant deaths have also been recorded after such exposures. A very recent example is the study of infant mortality associated with exposure to Radium following gas well development (fracking) in Pennsylvania [57]. The history of science has been full of major changes in scientific models. But none of these, from Galileo, Newton, Einstein and others, can have had quite the public health impact of the revelation that internal radionuclide exposures are so genotoxic and that the model employed to quantify these exposures is totally unsafe. Politicians, radiation risk agencies and experts are now caught between human health and the economic (nuclear energy, fracking) and military (nuclear weapons, depleted uranium) projects which depend upon permitting radioactive contamination. And it seems that the public, or well-meaning networks of independent experts, are powerless to change this or to trigger the legal processes available to stop it happening, despite the real new and important evidence that the current system of radiological protection is killing people.

References

- Muller HJ (1950) Radiation damage to the genetic material. Am Sci 38: 32-39 and 38: 399-425.

- BEIR VII (2006) Health risks of exposure to low levels of ionizing radiation. NAS.

- Busby C, Yablokov AV, Schmitz-Feuerhake I, Bertell R, Scott-Cato M (2010) ECRR2010 The 2010 Recommendations of the European Committee on Radiation Risk. The Health Effects of Ionizing Radiation at Low Doses and Low Dose Rates. Brussels: ECRR; Aberystwyth Green Audit.

- UNSCEAR (2000) Sources and effects of ionizing radiation. United Nations Scientific Committee on the Effects of Atomic Radiation.

- Eisenbud M, Gesell T (1997) Environmental radioactivity from natural, industrial and military sources (4th edn), Academic Press, San Diego, USA.

- NCRP (1987) Exposure of the population of the United States and Canada from natural background radiation. Report No 45.

- L’Annunziata MF (1998) Handbook of radioactivity analysis (1st edn), Academic Press, San Diego, USA.

- RERF http://www.rerf.jp/index_e.html

- ICRP (2007) The 2007 Recommendations of the International Commission on Radiological Protection. Ann ICRP 37: 2-4.

- ICRP (1991) Recommendations of the International Commission on Radiological Protection. Ann ICRP 21: 1-3.

- Carlson EA (1981) Genes, radiation, and society: The life and work of H. J. Muller. Cornell University Press, Ithaca, NY, USA.

- Gofman JW (1990) Radiation-induced Cancer from Low-dose Exposure: An Independent Analysis, CNR, San Francisco.

- Morgan KZ, Peterson KM (1999) The angry genie: One man’s walk through the nuclear age. University of Oklahoma Press, Norman, USA.

- Latour B (1987) Science in Action, Harvard University Press, Cambridge, USA.

- Busby C (2013) Aspects of DNA damage from internal radionuclides. In: Chen C (ed) New Research Directions in DNA Repair, InTech, Rijeka, Croatia.

- Brown WM, Doll R (1965) Mortality from cancer and other causes after radiotherapy for ankylosing spondylitis. BMJ 2: 1327-32.

- UK National Radiological Protection Board (2010) Photographs from Popplewell DS.

- IRSN (2010) Photograph from French Nuclear Protection Agency.

- Busby C, Bertell R, Yablokov A, Schmitz-Feuerhake I, Scott-Cato M (2003) ECRR2003: 2003 Recommendations of the European Committee on Radiation Risk. The health effects of ionizing radiation at low dose. Regulator's edition. J. Radiol. Prot 32: 369-72.

- Busby C, Yablokov AV, Schmitz-Feuerhake I, Bertell R, Scott-Cato M (2010) ECRR2010 The 2010 Recommendations of the European Committee on Radiation Risk. The health effects of ionizing radiation at low doses and low dose rates. J. Radiol. Prot 32: 369-72.

- Busby C (1994) Excess of other cancers in Wales unexplained. BMJ 308: 268.

- Busby C (1995) Wings of death: Nuclear Pollution and Human Health. Stat. Med. 16: 2025-26

- Busby C (2006) Wolves of water. A study constructed from atomic radiation, morality, epidemiology, science, bias, philosophy and death. (4th edn), Green Audit Books, Aberystwyth, UK.

- ECRR (2018) The 2018 Recommendations of the European Committee on Radiation Risk. The health effects of ionizing radiation at low doses and low dose rates. Brussels: ECRR; Aberystwyth Green Audit, UK. In Preparation.

- BEIR V (1990) Health effects of exposure to low levels of ionizing radiation. NAS, Washington D.C., USA.

- Busby C (2016) Invited letter to the editor on “The Hiroshima/Nagasaki survivor studies: Discrepancies between results and general perception” by Bernard R Jordan. Genetics 204: 1627-29.

- Yablokov AV, Nesterenko VB, Nesterenko AV (2009) Chernobyl: Consequences of the catastrophe in the Environment. Ann New York Acad. Sci 1181: 5-30.

- Schmitz-Feuerhake I, Busby C, Pflugbeil S (2016) Genetic radiation risks: A neglected topic in the low dose debate. Environ Health Toxicol 31: e2016001.

- Hoffmann W (2001) Fallout from Chernobyl nuclear disaster and congenital malformations in Europe. Arch Environ Health 56: 478-484.

- Dolk H, Nichols R (1999) Evaluation of the impact of Chernobyl on the prevalence of congenital anomalies in 16 regions of Europe. EUROCAT Working Group. Int J Epidemiol 28: 941-48.

- UNSCEAR (2006) Effects of ionizing radiation. United Nations Scientific Committee on the effects of atomic radiation.

- Lazjuk GI, Nikolaev DL, Novikova IV (1997) Changes in registered congenital anomalies in the Republic of Belarus after the Chernobyl accident. Stem Cells 15: 255-260.

- Feshchenko SP, Schröder HC, Müller WE, Lazjuk GI (2002) Congenital malformations among newborns and developmental abnormalities among human embryos in Belarus after Chernobyl accident. Cell Mol Biol 48: 423-26.

- Bogdanovich IP (1997) Comparative analysis of the death rate of children, aged 0-5, in 1994 in radio contaminated and conventionally clean areas of Belarus. In: Medico-biological effects and the ways of overcoming the Chernobyl accident consequence.

- Kulakov VI, Sokur TN, Volobuev AI, Tzibulskaya IS, Malisheva VA, et al. (1993) Female reproductive function in areas affected by radiation after the Chernobyl power station accident. Environ Health Perspect 101: 117-123.

- Petrova A, Gnedko T, Maistrova I, Zafranskaya M, Dainiak N (1997) Morbidity in a large cohort study of children born to mothers exposed to radiation from Chernobyl. Stem Cells 15: 141-50.

- Wertelecki W (2010) Malformations in a Chernobyl-impacted region. Pediatrics 125: e836-e843.

- Wertelecki W, Yevtushok L, Zymak-Zakutnia N, Wang B, Sosyniuk Z, et al. (2014) Blastopathies and microcephaly in a Chernobyl-impacted region of Ukraine. Congenit Anom 54: 125-149.

- Akar N, Ata Y, Aytekin AF (1989) Neural tube defects and Chernobyl? Paediatr Perinat Epidemiol 3: 102-103.

- Caglayan S, Kayhan B, Menteşoğlu S, Aksit S (1989) Changing incidence of neural tube defects in Aegean Turkey. Paediatr Perinat Epidemiol 3: 62-5.

- Güvenc H, Uslu MA, Güvenc M, Ozekici U, Kocabay K, et al. (1993) Changing trend of neural tube defects in eastern Turkey. J Epidemiol Community Health 47: 40-41.

- Mocan H, Bozkaya H, Mocan MZ, Furtun EM (1990) Changing incidence of anencephaly in the eastern Black Sea region of Turkey and Chernobyl. Paediatr Perinat Epidemiol 4: 264-68.

- Moumdjiev N, Nedkova V, Christova V, Kostova S (1992) Influence of the Chernobyl reactor accident on the child health in the region of Pleven, Bulgaria. In: International Pediatric Association. Excerpts from the 20th International Congress of Pediatrics, Brazil.

- Kruslin B, Jukić S, Kos M, Simić G, Cviko A (1998) Congenital anomalies of the central nervous system at autopsy in Croatia in the period before and after the Chernobyl accident. Acta Med Croatica 52: 103-107.

- Zieglowski V, Hemprich A (1999) Facial cleft birth rate in former East Germany before and after the reactor accident in Chernobyl. Mund Kiefer Gesichtschir 3: 195-99.

- Scherb H, Weigelt E (2004) Cleft lip and cleft palate birth rate in Bavaria before and after the Chernobyl nuclear power plant accident. Mund Kiefer Gesichtschir 8: 106-110.

- Government of Berlin West (1987) Annual health report. Section of Health and Social Affairs.

- Lotz B, Haerting J, Schulze E (1996) Changes in fetal and childhood autopsies in the region of Jena after the Chernobyl accident, Germany. [cited 2016 Jan 28].

- Busby C, DeMessieres M (2014) Miscarriages and congenital conditions in offspring of the British Nuclear Atmospheric test Program. Epidemiology (Sunnyvale) 4: 4.

- Sith B (2016) Evidence presented in the British Nuclear Test Veterans Royal Courts of Justice Appeals: Secretary of State for Defence.

- Korblein A (2004) Abnormalities in Bavaria after Chernobyl. Strahlentelex 416-417: 4-6.

- Busby C (2015) Editorial: Uranium epidemiology. J Epidemiol Prev Med 1: 009.

- Busby C, Hamdan M, Ariabi E (2010) Cancer, infant mortality and birth sex-ratio in Fallujah, Iraq 2005–2009. Int J Environ Res Public Health 7: 2828-2837.

- Whyte RK (1992) First day neonatal mortality since 1935: A re-examination of the Cross hypothesis. BMJ 304: 343-6.

- Sternglass EJ (1971) Environmental Radiation and Human Health. In: Proceedings of the Sixth Berkeley Symposium on Mathematical Statistics and Probability, University Press, Berkeley, California, USA.

- Euratom 96/29 Basic Safety Standards Directive.

- Busby C, Mangano JJ (2017) There’s a world going on underground—Infant mortality and fracking in Pennsylvania. J Environ Prot 8: 381-393.
