ISSN : 2349-3917

American Journal of Computer Science and Information Technology

Implementation of the Guidance Control Mechanism Using Image Recognition in CanSat

Aman Raj*

Department of Computer Science and Engineering, Netaji Subhas University of Technology, New Delhi, India

*Corresponding Author: Aman Raj, Department of Computer Science and Engineering, Netaji Subhas University of Technology, New Delhi, India

Tel: 916204269328

E-mail: amanraj95vs@gmail.com

Received date: March 19, 2023, Manuscript No. IPACSIT-23-16126; Editor assigned date: March 22, 2023, PreQC No. IPACSIT-23-16126 (PQ); Reviewed date: April 06, 2023, QC No. IPACSIT-23-16126; Revised date: May 20, 2023, Manuscript No. IPACSIT-23-16126 (R); Published date: May 30, 2023, DOI: 10.36648/2349-3917.11.6.001

Citation: Raj A (2023) Implementation of the Guidance Control Mechanism Using Image Recognition in CanSat. Am J Compt Sci Inform Technol Vol: 11 No:6.

Abstract

The CanSat competition organized by NASA has become increasingly popular as a means of showcasing planetary exploration rovers. Teams develop small autonomous rovers for planetary exploration and compete to reach a designated goal. In this study, the authors present a method for controlling the CanSat that uses image recognition to guide it towards the goal. The proposed method identifies the color of the goal pylon, and with this approach the CanSat reached a distance of 0 meters from the goal during the CanSat competition 2022.

Keywords

Image recognition; IoT; CanSat NASA; RGB; Temperature

Introduction

CanSat competitions, which involve demonstration experiments of planetary exploration rovers, have become increasingly popular. The author of this paper has participated in CanSat contests organised by NASA and the Indian government since 2018 [1]. In these competitions, teams must design planetary exploration rovers that are fully autonomous and operate in a manner that simulates real space development. The rovers are required to approach the target position as closely as possible, and no one may guide or touch the CanSat during the competition [2]. The CanSats are released from the sky by a rocket or balloon, land using a parachute, and rely on GPS position information for autonomous guidance control.

However, GPS has a positioning error of several meters, which makes it difficult to guide a CanSat accurately to the goal position. The authors therefore propose an approach that uses a camera image to identify the red pylon placed at the goal position and directs the CanSat to within a distance of 0 m. The approach was demonstrated in the CanSat contest 2022, where the CanSat was guided and controlled to a distance of 0 m from the goal, which supports the efficacy of the method.

Materials and Methods

Investigation of techniques for recognizing goal-images

The CanSat must identify a red pylon in a camera image, under limits on the size and weight of the onboard computer. The Raspberry Pi Zero is the highest-performance computer that can be used, so the image recognition method must identify the goal in real time with a limited amount of computation. The study compares several image recognition methods for recognizing the goal image: color binary, template matching, SIFT/SURF and deep learning image classification [3]. Table 1 assesses these methods qualitatively and indicates that deep learning is not practical because of its long computation time. Template matching, SIFT and SURF do not have this limitation, but they have difficulty recognizing the goal as the CanSat approaches it, since the camera then captures only a part of the goal. The color binary method is therefore preferred.

The color binary method can define the color range in one of three color spaces: RGB, YCrCb and HSV. Table 2 evaluates each color space qualitatively and indicates that RGB is not suitable for this application because changes in brightness and color temperature are hard to handle when brightness is spread across all three channels. YCrCb and HSV are more appropriate because a single parameter (Y or V) expresses brightness and the remaining two parameters (Cr, Cb or H, S) express color. YCrCb is selected because it is more resistant to changes in ambient light and easier to adjust according to experimental results [4].

| Method | Computation time | Accuracy | Recognition by goal partial image | Distance to the goal |
|---|---|---|---|---|
| Color binary | Short | High | Possible | Possible |
| Template matching | Short | Low | Impossible | Impossible |
| SIFT, SURF | Medium | Medium | Impossible | Impossible |
| Deep learning | Long | High | Possible | Possible |

Table 1: The qualitative assessment of image recognition methods.

| Color space | Dimension for specifying color range | Brightness dimension |
|---|---|---|
| RGB | 3 | 3 |
| YCrCb | 2 | 1 |
| HSV | 2 | 1 |

Table 2: The qualitative assessment of the color space.

Image recognition method

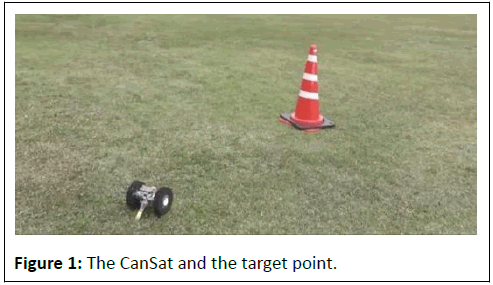

To detect the red goal pylon, the image captured by the Raspberry Pi camera (Figure 1) is converted from the RGB color space to the YCrCb color space. A binary image is then created by marking the pixels whose YCrCb values fall within the range of red for the goal pylon. The coordinates of the barycenter (centroid) of the binary image determine whether the goal lies to the left or to the right [5]. In addition, the distance to the goal is estimated from the number of marked pixels. In experiments, the percentage of pixels in the binary image that corresponded to the red pylon varied with the distance between the camera and the goal [6]: only 0.1% of the pixels were marked at a distance of 5 meters, while 35% were marked at a distance of 10 centimeters. To allow for the possibility that only a portion of the red pylon is recognized, the CanSat system considered the goal detected when 0.08% or more of the binary image was red.
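The steps described above (color conversion, binarization, centroid and red-pixel ratio) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the Cr/Cb bounds for "red" and the function names `rgb_to_ycrcb` and `detect_goal` are hypothetical, since the paper does not report its threshold values, and the conversion uses the common BT.601 full-range formula. Only the 0.08% detection threshold is taken from the text.

```python
import numpy as np

# Hypothetical Cr/Cb bounds for "red"; the paper does not report its values.
CR_MIN, CR_MAX = 150, 255
CB_MIN, CB_MAX = 0, 130
DETECT_RATIO = 0.0008  # 0.08 % of pixels, the detection threshold in the text


def rgb_to_ycrcb(rgb):
    """Convert an HxWx3 uint8 RGB image to float YCrCb (BT.601, full range)."""
    rgb = rgb.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + 128.0
    cb = (b - y) * 0.564 + 128.0
    # Clip so that saturated colors stay inside the 0..255 range.
    return np.clip(np.stack([y, cr, cb], axis=-1), 0.0, 255.0)


def detect_goal(rgb):
    """Return (detected, red_ratio, centroid_xy) for one camera frame."""
    ycrcb = rgb_to_ycrcb(rgb)
    cr, cb = ycrcb[..., 1], ycrcb[..., 2]
    # Binary image: True where the pixel falls inside the assumed red range.
    # Y (brightness) is deliberately ignored.
    mask = (cr >= CR_MIN) & (cr <= CR_MAX) & (cb >= CB_MIN) & (cb <= CB_MAX)
    red_ratio = float(mask.mean())
    if red_ratio < DETECT_RATIO:
        return False, red_ratio, None
    ys, xs = np.nonzero(mask)
    # Centroid of the marked pixels, in (x, y) image coordinates.
    return True, red_ratio, (float(xs.mean()), float(ys.mean()))
```

Calling `detect_goal(frame)` on an RGB frame returns the red-pixel ratio used for the distance judgment and the centroid whose x coordinate indicates whether the goal is left or right of the image center. In practice the bounds would need tuning against images taken under the actual lighting.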

CanSat guidance control method using image recognition

When the CanSat must cover a long distance to reach the goal, it relies on GPS position information for guidance [7]. Once it gets within 1.4 meters of the goal, the system shifts to image recognition. If the goal guidance system cannot identify the goal through the camera, the CanSat repeats left turns of approximately 30 degrees until it can identify the target. When the goal is identified, the CanSat takes the center of mass of the binary image as the direction of the goal relative to its own orientation, steers in that direction, moves towards the goal for 2 seconds, and stops. The CanSat repeats this process until the goal recognition pixels reach a threshold of 35% or greater [8], at which point it judges that the goal has been reached and halts. If the camera cannot recognize the goal after several attempts, the CanSat switches back to GPS guidance control and continues for 40 seconds. If the goal is recognized during this time, image-based guidance resumes; if it is not found within 40 seconds, the CanSat halts and switches to goal guidance control again.
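The decision made after each camera frame can be sketched as a small pure function. This is an illustrative reading of the procedure above, not the authors' code: the names `Command` and `next_command`, the normalized steering value, and the choice of 12 failed turns as the meaning of "several attempts" (one full rotation at 30 degrees per turn) are assumptions. The 0.08% and 35% thresholds, the 30 degree turn, the 2 second run and the 40 second GPS fallback come from the text.

```python
from dataclasses import dataclass
from typing import Optional

DETECT_RATIO = 0.0008    # goal counts as detected at 0.08 % red pixels
GOAL_RATIO = 0.35        # goal counts as reached at 35 % red pixels
SEARCH_TURN_DEG = 30.0   # left turn per search step
DRIVE_SECONDS = 2.0      # forward run after each recognition
GPS_SECONDS = 40.0       # duration of the GPS fallback
MAX_SEARCH_TURNS = 12    # assumption: "several attempts" = one full rotation


@dataclass
class Command:
    action: str            # "stop", "drive", "turn_left" or "gps"
    steer: float = 0.0     # -1.0 (full left) .. +1.0 (full right)
    seconds: float = 0.0
    degrees: float = 0.0


def next_command(red_ratio: float, centroid_x: Optional[float],
                 image_width: int, failed_turns: int) -> Command:
    """Choose the next action from one frame's recognition result."""
    if red_ratio >= GOAL_RATIO:
        # Enough of the frame is red: treat the goal as reached.
        return Command("stop")
    if red_ratio >= DETECT_RATIO and centroid_x is not None:
        # Steer towards the centroid, then drive for a fixed time.
        half = image_width / 2.0
        steer = max(-1.0, min(1.0, (centroid_x - half) / half))
        return Command("drive", steer=steer, seconds=DRIVE_SECONDS)
    if failed_turns >= MAX_SEARCH_TURNS:
        # Goal not found after repeated turns: fall back to GPS guidance.
        return Command("gps", seconds=GPS_SECONDS)
    # Goal not visible yet: keep searching with another left turn.
    return Command("turn_left", degrees=SEARCH_TURN_DEG)
```

Keeping the decision separate from camera and motor access makes it possible to check the thresholds on a desk, for example `next_command(0.008, 240, 320, 0)` yields a `drive` command steered to the right.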

Results and Discussion

Assessment by the panel

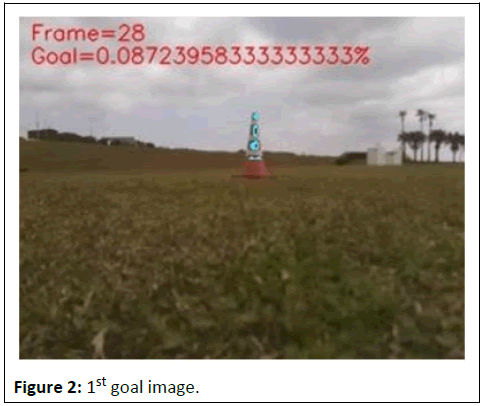

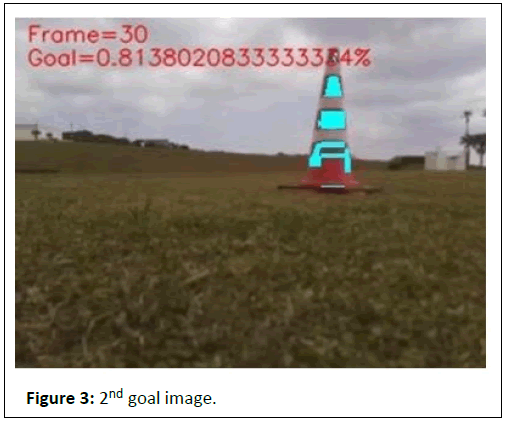

This section presents the results of the goal image guidance control experiment conducted at CanSat 2022. The CanSat moved from its landing spot under GPS guidance control and stopped about 4 meters away from the goal. It then switched to image recognition for guidance towards the goal point. The cyan color in Figure 2 indicates the goal identified by the CanSat. At that time, the CanSat was almost 4 meters from the goal, and the goal was identified even though only 0.09% of the pixels were detected as red [9]. Because the goal was located at the center of the picture, the CanSat moved straight towards it and came to a halt after 2 seconds, roughly 2 meters away from the goal. The CanSat then captured the image shown in Figure 3, in which about 0.8% of the pixels were recognized as the red of the goal. The CanSat detected that the goal was positioned on the right-hand side of the image, so it turned to the right and moved for another 2 seconds before stopping again.

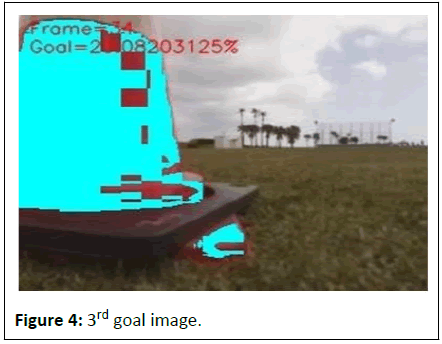

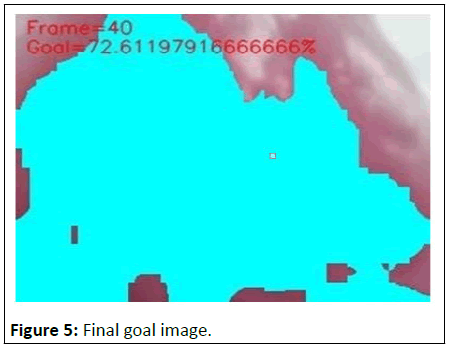

The CanSat thereby approached to about 50 cm from the goal. The camera captured the image shown in Figure 4, in which around 23% of the pixels, on the left side of the screen, were recognized as red. The CanSat therefore turned left and moved for 2 seconds, which brought it to a distance of 0 meters from the goal [10]. The camera then captured another image, shown in Figure 5, in which approximately 72% of the pixels were recognized as red. Having reached the goal, the CanSat came to a halt. The CanSat's guidance was thus successfully controlled all the way to the desired distance of 0 m at CanSat 2022.
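As a quick consistency check, the red-pixel percentages reported for Figures 2 to 5 can be compared with the two thresholds given in the method description (0.08% for detection, 35% for goal arrival). The sketch below only replays the numbers stated in the text; the function name `classify` and the state labels are illustrative.

```python
DETECT_RATIO = 0.0008   # 0.08 %: goal detected
GOAL_RATIO = 0.35       # 35 %: goal reached

# (figure, approximate distance, red-pixel ratio) as reported in the text
frames = [
    ("Figure 2", "4 m", 0.0009),
    ("Figure 3", "2 m", 0.008),
    ("Figure 4", "50 cm", 0.23),
    ("Figure 5", "0 m", 0.72),
]


def classify(ratio):
    """Map a red-pixel ratio to the state implied by the two thresholds."""
    if ratio >= GOAL_RATIO:
        return "goal"
    if ratio >= DETECT_RATIO:
        return "approach"
    return "search"


states = [classify(ratio) for _, _, ratio in frames]
for (name, distance, ratio), state in zip(frames, states):
    print(f"{name}: {distance}, {ratio:.2%} red -> {state}")
```

Under these thresholds the first three frames fall in the approach state and only the last frame exceeds the 35% arrival threshold, which matches the sequence of moves described in the experiment.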

Conclusion

To address the problem of inaccurate GPS positioning in CanSat competitions, a method that uses camera images for goal recognition and guidance control was proposed and evaluated through experiments in the competition. In the demonstration experiment at the CanSat contest 2022, the CanSat reached a distance of 0 meters from the goal, confirming the effectiveness of the proposed method.

However, dealing with changes in natural lighting conditions, such as sunny or cloudy skies, can be challenging, and the goal cannot be recognized beyond a distance of 5 meters. To overcome these challenges, future work will explore other image recognition methods, such as deep learning, with an emphasis on reducing the computation time required.

References

- Saito T, Akiyama M (2019) Development of rover with ARLISS requirements and the examination of the rate of acceleration that causes damages during a rocket launch. J Robot Mechatron 31:913-925

- Contente J, Galvao C (2022) STEM education and problem-solving in space science: A case study with CanSat. Educ Sci 12:251

- Akiyama M, Ninomiya H, Saito T (2023) Method to achieve high speed and high recognition rate of goal from long distance for CanSat. J Robot Mechatron 35:194-205

- Akiyama M, Saito T (2021) A novel method for goal recognition from 10 m distance using deep learning in CanSat. J Robot Mechatron 33:1359-1372

- Nakasuka S, Nakamura Y, Funase R, Nagai M, Kawashima R (2004) Autonomous parafoil control experiment as “comeback competition” for effective first step training towards satellite development. IFAC Proc Vol 37:919-924

- Wijaya IG, Uchimura K, Koutaki G (2013) Face recognition based on incremental predictive linear discriminant analysis. IEEJ IEICE Trans Inf Syst 133:74-83

- Li N, Song X (2021) Study of cognitive state recognition and assistive system for overall reading of foreign literature based on intelligent sensors. J Sen 2021:1-9

- Gao X, Jin L (2012) A vision-based fast chinese postal envelope identification system. J Inf Sci Eng 28:31-49

- Sugio T, Inui T (2000) Perceptual independence between identity and orientation in shape recognition. Cog Sci 7:275-291

- Bhattarai S, Go JS, Oh HU (2021) Experimental CanSat platform for functional verification of burn wire triggering-based holding and release mechanisms. Aerospace 8:192
